Preventing IP-based Drive-Bys in Apache

What’s the problem?

The Internet is a cesspool of script kiddies and automated bots, so I always recommend reducing the attack footprint on web servers as much as possible. Some day I’ll get into some additional defenses, but for today, here is something that will get rid of a huge amount of unwanted traffic.

If someone is going to attack my servers, I at least want them to be targeting me and my domain names rather than blindly stumbling upon my server by its IP address. By default, most Apache and Nginx installations will serve a site whether the Host Header contains a domain name or a bare IP address, so they'll respond to just about any request!

While fixing this for HTTP sites is straightforward, I haven't found any other guides on how to accomplish this with HTTPS sites, so hopefully this post helps some sysadmins protect their web servers.

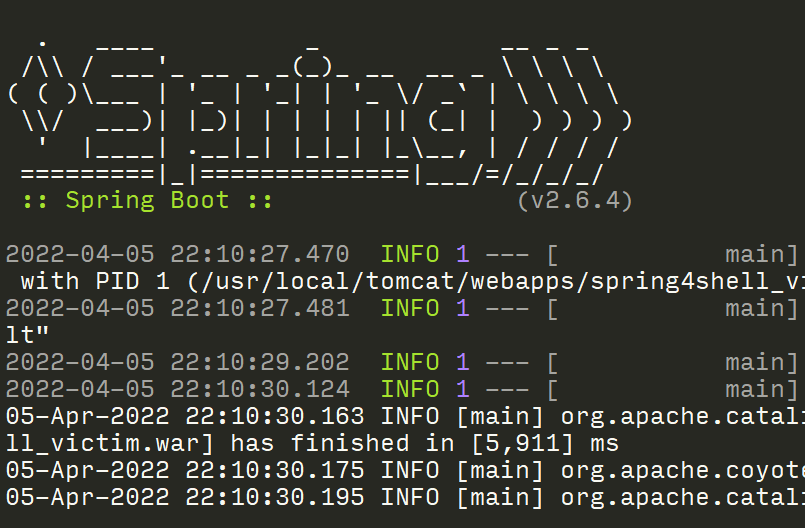

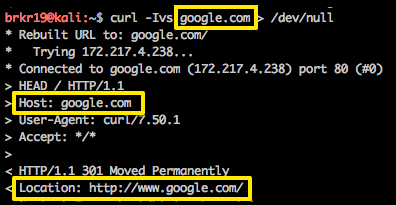

Let’s first take a look at a typical HEAD request for google.com.

In this example, notice the HOST: google.com part of the request, and then the Location: http://www.google.com/ in the reply. Pretty standard stuff here - we requested google.com and the server said to try again using www.google.com instead.

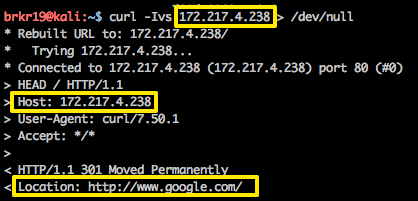

Now in this example, let’s say the attacker didn’t know what was hosted on this server and just randomly came across this IP address.

Here we see the Host: 172.217.4.238 header in the request, but the server still replies back with Location: http://www.google.com/. So the server just gave away that it’s hosting the www.google.com domain (well, let’s ignore that www.google.com resolves to another server due to their CDN configuration - the point remains).
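You can reproduce these requests by hand. The sketch below assumes `nc` (netcat) is installed and uses a made-up helper name, `head_request`; it prints a raw HTTP/1.1 HEAD request with whatever value you choose for the Host Header, so you can compare the answer for a domain name with the answer for a bare IP address.

```shell
# Print a raw HTTP/1.1 HEAD request for "/" with $1 as the Host header.
# HTTP requires CRLF line endings, and a blank line ends the headers.
# "Connection: close" asks the server to hang up after its reply.
head_request() {
  printf 'HEAD / HTTP/1.1\r\nHost: %s\r\nConnection: close\r\n\r\n' "$1"
}
```

For example, `head_request google.com | nc google.com 80` and `head_request 172.217.4.238 | nc 172.217.4.238 80` send the two requests discussed above (the IP address is the one from the screenshot and may not belong to Google today).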

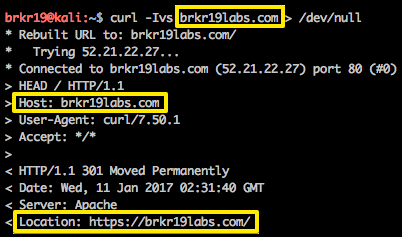

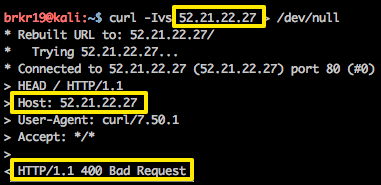

Now I’ll show an example from this server:

Of course, if you know my domain name and you're requesting the HTTP version of the site, I will kindly forward you on to the HTTPS version.

But what does it look like to someone who doesn't know the domain name?

They get a generic 400 Bad Request response. This is enough to stop most scans, and even if it doesn't, there's no site for the attackers to spider or fuzz for files and directories. This can greatly reduce the load on your server and shrink your attack footprint. Don't believe me? Check out your web logs sometime and look at which host names are being requested - you might be surprised how many generic scanners are hitting you. Obviously this does not stop anyone who knows your domain name from scanning your site, but it will at least stop the most unsophisticated attackers and bots.
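One catch: Apache's stock `combined` log format doesn't record the Host Header, so you first need a format that does. As a sketch, assuming you add something like `LogFormat "%{Host}i %h %l %u %t \"%r\" %>s %b" hostlog` and `CustomLog ${APACHE_LOG_DIR}/hosts.log hostlog` (the `hostlog` nickname, the `hosts.log` file and the `host_summary` helper are all made up for this example), the function below tallies the first field of each line. Apache writes `-` for requests that sent no Host Header at all.

```shell
# Tally the Host headers clients sent, most common first.
# Assumes a (hypothetical) access log whose FIRST field is %{Host}i;
# Apache logs "-" in that field when a request had no Host header.
host_summary() {
  local log_file="${1:-/var/log/apache2/hosts.log}"  # hypothetical default path
  # Host names are case-insensitive, so fold to lower case before counting.
  awk '{ print tolower($1) }' "$log_file" | sort | uniq -c | sort -rn
}
```

Running `host_summary | head -20` shows the twenty most-requested host names; anything that isn't one of your domains is traffic the catch-all VirtualHost will absorb.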

So what’s the solution?

Restricting access to Apache without the proper domain name is incredibly simple for HTTP sites, and only slightly more complicated for HTTPS sites. I provide some example Apache configurations below:

HTTP Sites

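Simply structure your VirtualHosts so that a default VirtualHost grabs the IP-based requests (or requests for domain names not hosted on this server; again, you might be surprised at the garbage you'll find in your web logs!). When a request's Host Header matches no ServerName or ServerAlias, Apache hands it to the first VirtualHost listed for that IP address and port, and wildcard Includes such as sites-enabled/*.conf are read in alphabetical order, which is why the catch-all file below is named 000-default.conf. More info on the RewriteRule flags can be found here: https://httpd.apache.org/docs/current/rewrite/flags.html.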

```apache
$ cat /etc/apache2/sites-enabled/000-default.conf
<VirtualHost 10.0.0.100:80>
    DocumentRoot /var/www/html
    LogLevel info
    ErrorLog ${APACHE_LOG_DIR}/error.log
    CustomLog ${APACHE_LOG_DIR}/access.log combined

    # Catch-all: "-" leaves the URL untouched, and a non-3xx status in the
    # R flag makes mod_rewrite return that status (400 Bad Request) directly
    RewriteEngine on
    RewriteRule ^ - [R=400,L]
</VirtualHost>
```

Then have your more-specific VirtualHost for your site (use whatever settings make sense for your site; this is just a sample). The key is setting the ServerName and ServerAlias so requests with the proper Host Header get funneled through this configuration.

```apache
$ cat /etc/apache2/sites-enabled/example.com.conf
<VirtualHost 10.0.0.100:80>
    ServerName example.com
    ServerAlias www.example.com
    DocumentRoot /var/www/html
    LogLevel info
    ErrorLog ${APACHE_LOG_DIR}/example_com_error.log
    CustomLog ${APACHE_LOG_DIR}/example_com_access.log combined

    RewriteEngine on
    RewriteCond %{HTTP_HOST} !^www\.example\.com [NC]
    RewriteRule ^/?(.*) http://www.example.com/$1 [L,R,NE]

    <Directory /var/www/html>
        Options FollowSymLinks
        AllowOverride All
        Require all granted
    </Directory>
</VirtualHost>
```

HTTPS Sites

Things get slightly more complicated with HTTPS sites, since Apache has to deal with the SSL handshake on top of figuring out which VirtualHost configuration to use. There are many good guides to hosting several sites with different SSL certificates on one IP, so I won't get into all that here. The important thing to understand is how SNI works (https://wiki.apache.org/httpd/NameBasedSSLVHostsWithSNI). We're going to take advantage of the ability to turn off strict SNI host checking (the SSLStrictSNIVHostCheck directive, which is off by default) to create a fall-back option for clients that don't support SNI (none worth caring about, in my opinion) or, more importantly, for clients that request a web page over SSL without sending the proper host name.

First, we need to create a self-signed certificate for our catch-all default VirtualHost. Since it will only ever be seen by bots and scanners, we don't have to care that it's self-signed or issued for a bogus host name.

```shell
$ openssl genrsa -out /tmp/whatareyoudoinghere.localhost.key 2048
$ openssl req -new -x509 -key /tmp/whatareyoudoinghere.localhost.key \
    -out /tmp/whatareyoudoinghere.localhost.crt -days 3650 \
    -subj "/CN=whatareyoudoinghere.localhost"
$ sudo mkdir -p /usr/local/certs
# Move the key and certificate out of /tmp
$ sudo mv /tmp/whatareyoudoinghere.localhost.* /usr/local/certs/
$ sudo chown -R www-data:www-data /usr/local/certs
$ sudo chmod 440 /usr/local/certs/*
```

Now we just need to add a new VirtualHost like we did for the HTTP site, but this time with port 443 specified and a few more lines for the cert locations:

```apache
$ cat /etc/apache2/sites-enabled/000-default-443.conf
<VirtualHost 10.0.0.100:443>
    DocumentRoot /var/www/html
    LogLevel info
    ErrorLog ${APACHE_LOG_DIR}/error.log
    CustomLog ${APACHE_LOG_DIR}/access.log combined

    # Serve the throwaway self-signed certificate to anyone who lands here
    SSLEngine on
    SSLCertificateFile /usr/local/certs/whatareyoudoinghere.localhost.crt
    SSLCertificateKeyFile /usr/local/certs/whatareyoudoinghere.localhost.key

    # Catch-all: answer every request with 400 Bad Request
    RewriteEngine on
    RewriteRule ^ - [R=400,L]
</VirtualHost>
```

Again, just like with the HTTP site, you need a more-specific VirtualHost for your site (use whatever settings make sense for your site; this is just a sample). This example uses Let's Encrypt, because you really should give them a shot!

```apache
$ cat /etc/apache2/sites-enabled/example.com-443.conf
<VirtualHost 10.0.0.100:443>
    ServerName example.com
    ServerAlias www.example.com
    DocumentRoot /var/www/html
    LogLevel info
    ErrorLog ${APACHE_LOG_DIR}/example_com_error.log
    CustomLog ${APACHE_LOG_DIR}/example_com_access.log combined

    SSLEngine on
    # Since Apache 2.4.8, SSLCertificateFile can hold the whole chain,
    # replacing the deprecated SSLCertificateChainFile directive
    SSLCertificateFile /etc/letsencrypt/live/example.com/fullchain.pem
    SSLCertificateKeyFile /etc/letsencrypt/live/example.com/privkey.pem
    Include /etc/letsencrypt/options-ssl-apache.conf

    <Directory /var/www/html>
        Options FollowSymLinks
        AllowOverride All
        Require all granted
    </Directory>
</VirtualHost>
```

Simply restart or reload Apache and you should be all set!
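It's worth confirming which VirtualHost Apache will actually treat as the default. `apachectl -S` parses the configuration on disk (so it works before a reload) and, for each name-based address and port, prints a `default server NAME (FILE:LINE)` line for the first-listed vhost, which is the one that answers requests whose host name matches no ServerName or ServerAlias. The helper below is a small sketch that pulls out just those lines; it assumes the Apache 2.4 output format and config paths without spaces, and the `default_vhosts` name is made up for this example.

```shell
# Read `apachectl -S` output on stdin and print "ADDRESS -> FILE:LINE"
# for the default (first-listed) vhost of each name-based address:port.
# Assumes Apache 2.4 output and config file paths containing no spaces.
default_vhosts() {
  awk '
    # e.g. "10.0.0.100:443   is a NameVirtualHost"
    /is a NameVirtualHost/ { addr = $1 }
    # e.g. "  default server host (/etc/apache2/sites-enabled/000-default.conf:1)"
    /default server/ {
      src = $NF                # last field: "(FILE:LINE)"
      gsub(/[()]/, "", src)    # strip the parentheses
      print addr " -> " src
    }
  '
}
```

On Debian/Ubuntu you would typically run `sudo a2enmod rewrite ssl` and `sudo apachectl configtest`, then `sudo apachectl -S 2>&1 | default_vhosts`; each address should point at a 000-default file before you `sudo systemctl reload apache2`.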

The results

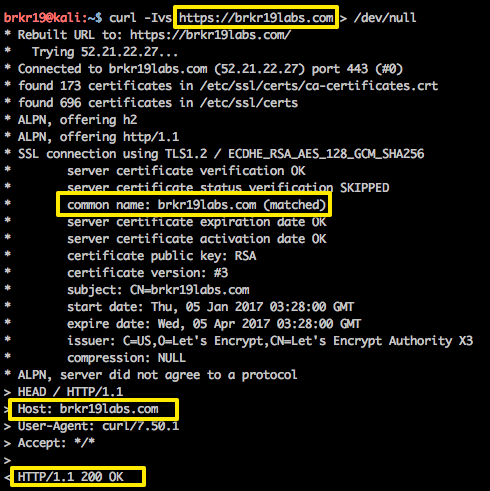

Let’s see what happens for a normal request to the HTTPS version of my site now:

You can see that with a valid Host Header, Apache performs the SSL handshake with my Let's Encrypt-signed certificate and returns a 200 OK HTTP response.

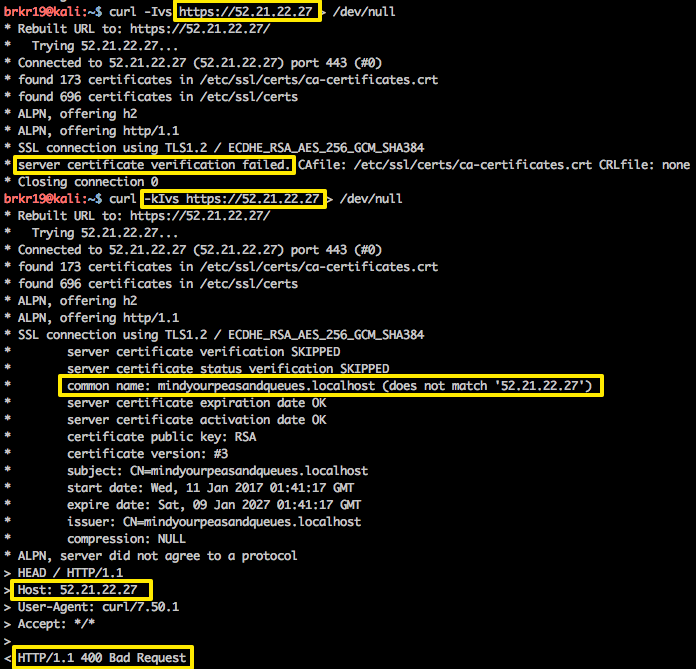

Let’s try again but with just the IP address as the Host Header this time:

First off, the normal request fails because of the invalid certificate (it isn't signed by a trusted authority and its common name doesn't match). This might stop many scans and bots right here, but in case they are set to ignore certificate errors, we can simulate that with curl's -k flag. Once the invalid certificate is ignored, we still end up with a 400 Bad Request from the server. It's important to use a certificate that is NOT for your domain, so an attacker scraping the SSL certificate can't learn your domain name and simply update the scan.
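To double-check what a scanner actually receives, you can pull the certificate from the bare IP address and search it for your domain. A minimal sketch using the `openssl` CLI: `openssl s_client` only sends SNI when given `-servername` or (from OpenSSL 1.1.1 on) when the `-connect` target looks like a DNS name, so connecting to an IP address mimics an IP-based scan. The function names are made up for this example, and `10.0.0.100` is the sample address from the configs above.

```shell
# Save (as PEM, in file $2) the certificate served on IP address $1,
# port 443, to a client that sends no SNI, i.e. what an IP-based scanner
# sees. Requires network access to the server.
fetch_default_cert() {
  openssl s_client -connect "$1:443" </dev/null 2>/dev/null \
    | openssl x509 -out "$2"
}

# Succeed (exit status 0) if certificate file $1 mentions domain $2
# anywhere in its decoded text: subject, issuer, subjectAltName, etc.
# The match is case-insensitive and literal (not a regex).
cert_mentions_domain() {
  openssl x509 -in "$1" -noout -text | grep -qiF -- "$2"
}
```

For example, `fetch_default_cert 10.0.0.100 /tmp/default.pem && cert_mentions_domain /tmp/default.pem example.com && echo "domain leaked"` should print nothing when the catch-all certificate is doing its job.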

So with a few simple commands to create a self-signed cert and one additional default VirtualHost, we are able to stop at least some unwanted traffic from hitting the server. This is by no means ‘securing’ the site, but at least it makes recon and discovery a bit harder.

Credits

- We'd like to give a special shout-out to the IPew project for the software that created the tongue-in-cheek Pew Pew map at the top of this page!

Let us know what you think

Please share this post if you found it useful and reach out if you have any feedback or questions!
